How to Integrate AI Ethics and Explainability into Your PDF Redaction Workflow


Picture this: your legal team rushes to file a critical document, confident that your AI redaction tool has protected all sensitive client data. Three months later, you discover it failed to redact a competitor's confidential information, and now you're facing regulatory scrutiny, damaged partnerships, and a compliance nightmare you can't explain. This isn't hypothetical. Meta faced a similar crisis when its redaction process failed during a high-profile antitrust trial, exposing data from Apple, Snap, and Google. The worst part? They couldn't explain why their system failed.

As AI-powered redaction tools become standard in 2025, they're transforming how we handle sensitive documents—but they're also raising critical questions about transparency, bias, and accountability. When algorithms decide what information to hide or preserve, who's responsible when things go wrong? How do you prove your redaction choices were fair and unbiased? This guide reveals how to build ethical AI practices into your PDF redaction workflow, ensuring your systems not only protect sensitive data but can explain and defend every decision they make.

Understanding AI Ethics in Document Redaction: Core Principles You Need to Know

When AI algorithms start making decisions about what sensitive information to hide or keep in your documents, ethical considerations become critical. Think of it like giving someone the keys to your filing cabinet—you need to trust they'll handle everything responsibly. According to SS&C Blue Prism, five core principles form the foundation of responsible AI: fairness and inclusiveness, privacy and security, transparency, accountability, and reliability and safety.


Fairness ensures your AI doesn't discriminate when identifying sensitive data—it should treat a manager's salary information the same way it treats an intern's. Transparency means understanding how the algorithm decides what needs redacting. You wouldn't hire someone to review confidential documents without knowing their process, right?

Accountability establishes who's responsible when things go wrong. If your AI fails to redact a Social Security number, there must be clear ownership. Privacy and security protect the very data you're trying to safeguard, while reliability and safety ensure consistent, predictable performance. Research on ethical AI decision-making highlights how biased algorithms can pose serious risks to fair treatment.

For practical implementation, tools like Smallpdf's Redact PDF demonstrate these principles in action. Their platform combines TLS encryption with GDPR compliance, automatically deleting files after processing—showcasing privacy and security working together. The drag-and-drop interface provides transparency, letting you see exactly what's being redacted before permanent changes apply, ensuring accountability remains firmly in human hands.

The Explainability Problem: Why Your Redaction AI Needs to Show Its Work

Imagine defending a legal decision about what you redacted—only to realize you can't explain why your AI flagged certain information as sensitive. That's the nightmare scenario facing organizations in 2025, as regulatory frameworks increasingly demand transparency from AI systems handling sensitive data.

Meta's redaction disaster during a high-profile antitrust trial perfectly illustrates this problem. When their redaction process failed to properly protect competitor data from Apple, Snap, and Google, the lack of explainability made it impossible to determine whether the failure was human error, technical malfunction, or flawed AI logic. The result? Damaged partnerships, regulatory scrutiny, and a tech-wide trust crisis.

Why Explainability Matters for Compliance

According to "Case Studies in Explainable AI," leading institutions like JPMorgan Chase leverage techniques such as SHAP and LIME to explain their AI decision-making processes, a practice that regulators increasingly expect of AI systems handling sensitive documents. The EU AI Act requires documented bias testing and continuous monitoring for high-risk AI systems, while Colorado's AI Act (SB 24-205) requires deployers to give affected parties the principal reasons for adverse decisions.

Here's what happens when your redaction AI can't explain itself:

- Legal vulnerability: Inability to defend redaction choices in court proceedings

- Compliance failures: Regulatory penalties for not meeting transparency requirements

- Audit nightmares: No documentation trail showing how sensitive data was identified

- Civil liability: As recent regulatory trends show, compliance failures now trigger private litigation beyond regulatory enforcement

Modern tools like Smallpdf's Redact PDF address this by providing clear documentation of what's being redacted and why, ensuring permanent removal that can be verified and explained—crucial for maintaining both security and defensibility in 2025's transparency-focused regulatory landscape.
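To make "what's being redacted and why" concrete, here is a minimal sketch of a detector that records a reason alongside every proposed redaction. It is an illustration only, not how any particular product works: the `RULES` table, the `RedactionDecision` record, and both helper functions are hypothetical names, and the two regular expressions are deliberately simple.

```python
import re
from dataclasses import dataclass

# Hypothetical rules for illustration: each detector is paired with the
# human-readable reason that will appear in the audit record.
RULES = {
    "ssn": (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
            "Matches the US Social Security number format NNN-NN-NNNN"),
    "email": (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
              "Matches an email address pattern"),
}


@dataclass(frozen=True)
class RedactionDecision:
    rule: str    # which detector fired
    start: int   # character offset where the redaction begins
    end: int     # character offset where the redaction ends (exclusive)
    reason: str  # explanation a reviewer or auditor can read


def explain_redactions(text: str) -> list[RedactionDecision]:
    """Return every proposed redaction together with the reason for it."""
    decisions = [
        RedactionDecision(rule, m.start(), m.end(), reason)
        for rule, (pattern, reason) in RULES.items()
        for m in pattern.finditer(text)
    ]
    return sorted(decisions, key=lambda d: d.start)


def apply_redactions(text: str, decisions: list[RedactionDecision]) -> str:
    """Replace each flagged span with a marker, merging overlapping spans."""
    parts, cursor = [], 0
    for d in decisions:
        if d.start < cursor:
            # Overlaps the previous span: extend the redacted region.
            cursor = max(cursor, d.end)
            continue
        parts.append(text[cursor:d.start])
        parts.append("[REDACTED]")
        cursor = d.end
    parts.append(text[cursor:])
    return "".join(parts)


sample = "Contact jane@example.com, SSN 123-45-6789."
decisions = explain_redactions(sample)
redacted = apply_redactions(sample, decisions)
```

The decision record stores offsets and a reason but never the matched text itself, so the explanation can be kept for audits without re-exposing the sensitive value.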

Implementing an Ethical AI Framework for Your PDF Redaction Workflow

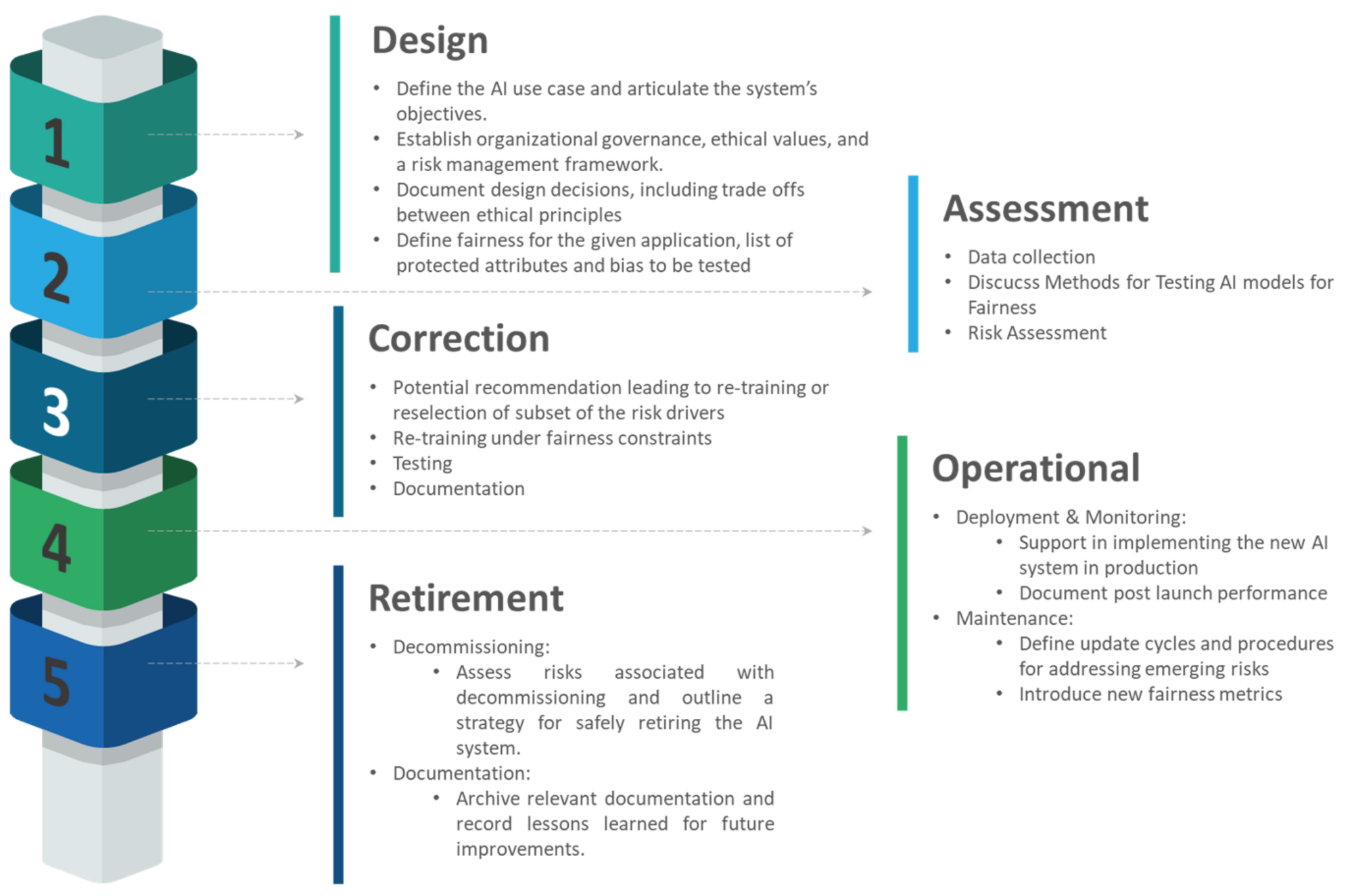

Building an ethical AI framework isn't just about checking compliance boxes—it's about creating a system that your team can trust and that actually protects sensitive information. According to ISACA's Artificial Intelligence Audit Toolkit, organizations need a three-line defense strategy: identifying risks, designing policies, and testing control effectiveness.

Start with a comprehensive bias audit. The European Data Protection Board's AI Auditing Checklist identifies critical moments where bias can creep into your workflow—from how you select training data to how redaction decisions get deployed. Think of it like checking your car's blind spots: historical bias, label bias, and deployment bias all need regular monitoring.

For practical implementation, TheIntellify's Responsible AI Guide recommends establishing cross-functional governance teams that include legal, IT, and business stakeholders. Your framework should document data quality assurance procedures, model validation protocols, and clear escalation paths when edge cases arise.

Consider tools like Smallpdf's Redact PDF, which combines AI-powered detection with human oversight—a practical example of responsible AI in action. The platform uses TLS encryption and GDPR-compliant processing while ensuring that redactions are permanent and unrecoverable, demonstrating how ethical principles translate into technical safeguards.

According to Teqfocus's GenAI Governance Checklist, continuous monitoring is non-negotiable. Set up regular audits that test for data drift, accuracy degradation, and unintended bias patterns. One government agency found that quarterly compliance audits helped them catch potential fairness issues before they impacted citizens—prevention beats remediation every time.
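As a rough illustration of an accuracy-degradation check, the sketch below compares recent audit accuracy against a baseline and flags a drop beyond a tolerance. The function name, the 2-point tolerance, and the sample figures are assumptions chosen for the example, not values taken from any of the cited checklists.

```python
from statistics import mean


def detect_accuracy_drift(baseline, recent, max_drop=0.02):
    """Compare mean accuracy of recent audits against a baseline period.

    `baseline` and `recent` are lists of accuracy scores in [0, 1].
    `max_drop` is an assumed tolerance; choose one that fits your risk level.
    """
    baseline_mean = mean(baseline)
    recent_mean = mean(recent)
    drop = baseline_mean - recent_mean
    return {
        "baseline": baseline_mean,
        "recent": recent_mean,
        "drop": drop,
        "drifted": drop > max_drop,
    }


# Illustrative numbers only.
degraded = detect_accuracy_drift([0.98, 0.97, 0.99], [0.93, 0.94, 0.92])
stable = detect_accuracy_drift([0.98, 0.98], [0.975, 0.985])
```

A check like this only catches aggregate degradation. Running it separately per document type or per demographic slice is what surfaces unintended bias patterns.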


Common Pitfalls and How to Avoid Them: Bias, Over-Redaction, and Under-Redaction

When implementing AI-powered PDF redaction, three critical challenges threaten both compliance and usability: algorithmic bias, overzealous redaction, and dangerous under-redaction. According to research on bias in artificial intelligence, AI bias stems from flawed training data, inadequate preprocessing, and homogeneous development teams, all of which directly affect redaction accuracy across different demographic groups.

Algorithmic bias represents perhaps the most insidious threat. Studies examining bias in AI systems show that machine learning models can produce systematically prejudiced results when trained on historical data that contains embedded societal biases. In redaction workflows, this might manifest as inconsistent performance across documents containing different name origins or cultural contexts—potentially exposing some individuals while protecting others.

Over-redaction creates a different problem: hiding non-sensitive information that stakeholders actually need to see. This typically occurs when organizations implement overly conservative AI settings out of fear, resulting in documents so heavily redacted they become useless. One legal team discovered their AI tool was flagging every date as potentially sensitive, rendering contract timelines completely unreadable.

Under-redaction poses the gravest risk—leaving sensitive data exposed. Real-world examples of AI bias demonstrate how AI systems can miss critical information when it appears in unexpected formats or contexts. A single overlooked Social Security number can trigger massive compliance violations and identity theft.
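The "unexpected formats" problem is easy to reproduce with a pattern that only expects hyphens. The sketch below is a hypothetical, more tolerant matcher that also accepts spaces, dots, or no separators. It is an assumption-laden example rather than a complete detector: it will also flag any other nine-digit number, so its hits are best treated as candidates for human review.

```python
import re

# Nine digits grouped 3-2-4, with an optional hyphen, space, or dot between
# groups. The lookarounds stop it from matching inside longer digit runs.
SSN_CANDIDATE = re.compile(r"(?<!\d)\d{3}[-\s.]?\d{2}[-\s.]?\d{4}(?!\d)")


def find_ssn_candidates(text: str) -> list[str]:
    """Return every substring that looks like an SSN in a common format."""
    return [m.group() for m in SSN_CANDIDATE.finditer(text)]


found = find_ssn_candidates(
    "IDs: 123-45-6789, 123 45 6789, 123456789, 123.45.6789"
)
not_found = find_ssn_candidates("Order 1234567890 shipped")
```

Widening the pattern lowers the risk of under-redaction at the cost of more false positives, which is the same trade-off described under over-redaction above.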

Mitigation strategies require multi-layered approaches. Start by implementing diverse training datasets that represent your actual document types. Modern solutions like Smallpdf's Redact PDF tool provide secure, straightforward interfaces with TLS encryption and GDPR compliance, ensuring redacted information cannot be recovered. Establish continuous monitoring protocols with human-in-the-loop review processes for high-stakes documents. Most importantly, conduct regular bias audits using test documents containing diverse name types, formats, and demographic indicators to verify consistent performance across all user groups.
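A bias audit of this kind can be reduced to a simple comparison: for each group of test documents, what share of the planted sensitive items did the tool actually catch? The sketch below assumes you already have labeled test results as `(group, detected)` pairs. The group labels, counts, and function names are placeholders for illustration.

```python
from collections import defaultdict


def recall_by_group(cases):
    """Compute the detection rate (recall) per group.

    `cases` is an iterable of (group, detected) pairs, one per sensitive
    item planted in the test documents.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, detected in cases:
        totals[group] += 1
        hits[group] += int(detected)
    return {group: hits[group] / totals[group] for group in totals}


def max_recall_gap(recalls):
    """Largest difference in recall between any two groups."""
    return max(recalls.values()) - min(recalls.values())


# Illustrative results: 10 planted names per group.
cases = (
    [("group_a", True)] * 9 + [("group_a", False)] * 1
    + [("group_b", True)] * 7 + [("group_b", False)] * 3
)
recalls = recall_by_group(cases)
gap = max_recall_gap(recalls)
```

In this made-up example the tool misses three times as many names in `group_b` as in `group_a`. A gap like that is the signal to revisit training data or add a human review step for the affected document types.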

Measuring Success: KPIs and Auditing Practices for Ethical Redaction

Building an ethical AI redaction system isn't a "set it and forget it" endeavor; it requires continuous monitoring and measurement. Think of it like maintaining system uptime: according to "Automated AI bias detection without manual assessments," AI fairness requires always-on observability, not yearly checkups. Organizations that embed continuous monitoring into their daily operations transform compliance from a burden into a strategic advantage.

Essential Fairness and Accuracy Metrics

Start by implementing quantifiable fairness metrics that measure whether your AI performs equitably across different demographic groups. "AI Evaluation Metrics - Bias & Fairness" emphasizes that fairness metrics aim to quantify whether AI models treat all individuals equally, regardless of characteristics like age, gender, or socioeconomic status. Track accuracy rates across document types, monitor false-positive and false-negative redaction rates, and measure consistency in how sensitive information is identified and protected across different user groups.
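One way to track false positives and false negatives is to compare the spans the tool redacted against a hand-labeled set of spans that should have been redacted. The sketch below assumes exact-match `(start, end)` character spans, and its function and field names are hypothetical. Because span detection has no natural count of true negatives, it reports precision as the over-redaction indicator rather than a classical false-positive rate.

```python
def redaction_metrics(predicted: set, expected: set) -> dict:
    """Compare predicted redaction spans against hand-labeled ground truth.

    Both arguments are sets of (start, end) character spans.
    """
    true_pos = len(predicted & expected)   # correctly redacted
    false_pos = len(predicted - expected)  # over-redaction
    false_neg = len(expected - predicted)  # under-redaction (missed items)

    flagged = true_pos + false_pos
    sensitive = true_pos + false_neg
    return {
        "false_positives": false_pos,
        "false_negatives": false_neg,
        # Share of redactions that were actually needed.
        "precision": true_pos / flagged if flagged else 1.0,
        # Share of sensitive items that were actually redacted.
        "recall": true_pos / sensitive if sensitive else 1.0,
    }


metrics = redaction_metrics(
    predicted={(0, 5), (10, 15), (20, 25)},
    expected={(0, 5), (10, 15), (30, 35)},
)
```

Computing the same metrics separately for each document type or user group turns them into the consistency measure described above.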

Establishing Continuous Audit Readiness

Modern ethical redaction requires what "Continuous audit readiness across frameworks in 2025" calls an "always-on state" where compliance posture is maintained in real time. Leverage audit management software that automates compliance checks and continuously captures relevant data. For practical implementation, platforms like Smallpdf's Redact PDF tool provide built-in audit logging with TLS encryption and GDPR compliance, ensuring every redaction action creates an unbroken documentation chain.
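An "unbroken documentation chain" can be approximated with a hash-chained log, where each entry includes the hash of the previous one so that later edits become detectable. The sketch below is a generic illustration under that assumption and does not describe any vendor's audit log. `append_entry`, `verify_chain`, and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(event: dict, prev_hash: str) -> str:
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev_hash": prev_hash},
                         sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an event to the log, linking it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    entry = {
        "event": event,
        "prev_hash": prev_hash,
        "hash": _entry_hash(event, prev_hash),
    }
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"doc": "contract.pdf", "rule": "ssn", "page": 3})
append_entry(log, {"doc": "contract.pdf", "rule": "email", "page": 5})
intact = verify_chain(log)

log[0]["event"]["rule"] = "none"  # simulate after-the-fact tampering
tampered_ok = verify_chain(log)
```

A chain like this only detects tampering. It still needs to be stored somewhere that the people being audited cannot silently replace wholesale.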

Building Incident Response Systems

Establish clear incident reporting workflows that catch policy violations before they escalate. "Enterprise AI Governance: Complete Implementation Guide" recommends monitoring and analytics for usage tracking combined with governance workflow systems for risk assessments. Create feedback loops where your team can flag questionable redaction decisions, investigate root causes, and implement corrective actions within 48 hours.
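The 48-hour target is easier to hold when something checks it automatically. The sketch below assumes incidents are stored as simple records with a report time and an optional resolution time, and lists those that have passed the deadline. The record fields and incident IDs are invented for the example.

```python
from datetime import datetime, timedelta

RESOLUTION_SLA = timedelta(hours=48)  # target taken from the workflow above


def overdue_incidents(incidents, now):
    """Return IDs of unresolved incidents older than the resolution SLA."""
    overdue = []
    for incident in incidents:
        unresolved = incident["resolved_at"] is None
        if unresolved and now - incident["reported_at"] > RESOLUTION_SLA:
            overdue.append(incident["id"])
    return overdue


# Illustrative data only.
incidents = [
    {"id": "INC-1", "reported_at": datetime(2025, 3, 1, 9, 0),
     "resolved_at": None},
    {"id": "INC-2", "reported_at": datetime(2025, 3, 3, 9, 0),
     "resolved_at": None},
    {"id": "INC-3", "reported_at": datetime(2025, 3, 1, 9, 0),
     "resolved_at": datetime(2025, 3, 2, 9, 0)},
]
late = overdue_incidents(incidents, now=datetime(2025, 3, 4, 9, 0))
```

Passing `now` in explicitly rather than calling `datetime.now()` inside the function keeps the check easy to test and to replay during an audit.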

Conclusion: Building Trust Through Transparent and Accountable Redaction

Building trust in AI-powered redaction isn't optional anymore—it's the foundation of sustainable compliance and operational excellence. Your organization's credibility depends on implementing systems that don't just hide sensitive information, but can explain why specific choices were made and prove they're consistently fair across all demographic groups.

Start by conducting a bias audit of your current redaction workflow, establishing clear governance policies, and implementing continuous monitoring systems that catch issues before they escalate. Document everything—from training data sources to model validation protocols—because regulatory frameworks like the EU AI Act now mandate this transparency for high-risk AI systems.

| Priority Action | Timeline | Key Benefit |
|----------------|----------|-------------|
| Conduct initial bias audit | Week 1-2 | Identify existing fairness gaps |
| Establish governance team | Week 3-4 | Create accountability structure |
| Implement monitoring tools | Month 2 | Enable continuous compliance |
| Document all processes | Ongoing | Build legal defensibility |

For practical implementation that combines security with explainability, tools like Smallpdf's Redact PDF provide TLS encryption and GDPR compliance while ensuring every redaction creates a clear audit trail. The question isn't whether to prioritize ethical AI—it's whether you're ready to lead with transparency or react to regulatory penalties. Start your audit today.